As part of the EU Erasmus+ eAssessment project, which I have reported on previously on this blog, we are developing a toolkit aimed at supporting teachers and trainers in Europe in innovating with assessment.

We have divided up the work between the project partners and I agreed to write the section on eAssessment and pedagogy. I finished the draft this week; typically, it took me twice as long to write as I had expected. This is work in progress and I am now looking for feedback before it is translated into the five project partner languages and published on the web. So if you have any criticisms of what I have put in or, perhaps even more importantly, of what I have left out, please leave a comment below.

eAssessment and Pedagogy

In the last section, we looked at drivers for change in assessment from an operational perspective. In this section we will focus on pedagogic approaches to eAssessment.

Reconstructing experiences

As Mike Mimirinis[4] has said in writing about assessment in higher education, “many of the innovations in the design and implementation of assessment mark a departure from standardised tests or examinations, moving towards a broader notion of assessment for learning, enhancement of learning for the individual, engagement with the student during assessment, and the involvement of teachers in the assessment process. The purpose of assessment, therefore, not only serves the purposes of selection or certification, rather it involves participants’ own perspectives in reconstructing their experiences as they learn and undertake assessments.”

Formative assessment

The distinction between the different purposes of assessment is important. Formative e-assessment concerns the use of technology to support the iterative process of analysing information regarding student learning and its evaluation in relation to previous attainment of learning outcomes[5]. In formative assessment or assessment for learning, evidence is used to provide developmental feedback to learners on their current skills and knowledge relative to a defined standard. This information may also be used by trainers so that instruction may be modified to suit learner needs.

There seems to have been a marked turn towards more formative assessment in vocational education and training in many European countries. This may be because of the widespread adoption of competence-based VET and also because of the importance given to skills. Rather than just measuring vocational learners’ knowledge in an occupational area, technology can be used to record their practice, primarily as a basis for improvement, with competence statements providing a rubric for measuring those skills. This can take place in training centres but also in the workplace, especially for those pursuing apprenticeships.

Diagnostic assessment

In diagnostic assessment, e-assessment resources and materials are used to identify candidates’ strengths and areas for improvement. This form of assessment often occurs at the commencement of a training programme.

Summative assessment

Summative e-assessment offers evidence of students’ achievement (what they know, understand, and can do) by assigning a value to their demonstrable achievements. In summative assessment or assessment of learning, e-assessment resources and materials are used in gathering evidence and making decisions about the competence of the learner.

Assessment as Learning

The UK Jisc has also recently identified another purpose of assessment - assessment as learning[6]. Here, the main purpose of the assessment process is to provide learning opportunities for learners. Assessment as learning is a learning experience where the formative and summative elements work well together. Tasks appear relevant, students can see what they have gained by undertaking the activity, they feel involved in a dialogue about standards and evidence, and the continuous development approach helps with issues of stress and workload for staff and students.

This may become more important as personalised assessment (where the difficulty of the assessment activities is automatically calibrated against the learner’s competence and performance) is integrated within online learning systems.

High and low-cost assessment

Another distinction frequently made in discussing assessment is between high-cost and low-cost assessment. Essentially, this refers to the consequences resting on the outcomes of the assessment. Formative assessment is generally seen as low cost: failure is used to show the need for more opportunities for learning. On the other hand, end-of-course summative assessment is usually high cost if the ability to progress to employment or further training rests on the results.

Authentic assessment

In this short section, Shane Sutherland from PebblePad ePortfolio explains what authentic assessment is[7].

There are plenty of academic articles about what authentic assessment is – and it can get pretty complicated. But there’s a simple explanation to be had: authentic assessment relates to what students experience in the real world. Instead of testing students’ proficiency in completing tests, authentic assessment methods are designed to assess knowledge and test how students apply that knowledge in real world situations.

Indeed, we’ve seen that the extent to which an assessment demonstrates the purposeful application of knowledge in practice is increasingly more important than knowledge recall. In short – knowing ‘stuff’ is important, but knowing how to apply that ‘stuff’, in different contexts, is invaluable.

Importantly, authentic assessment mechanisms give students the ability to focus on how they solve problems. In an exam situation, a student might correctly answer a question – but that doesn’t mean they did it purposefully – or that they could reach the same answer again. Instead, authentic assessment, which includes the opportunity for reflection, allows students to show their ‘workings out’. And importantly, they can decide what they’d do better or differently in future – allowing for continuous improvement.

The good news is that there are plenty of educators which are already harnessing authentic assessment to help develop skilled, capable – and importantly confident – students and graduates. Over the last year (although admittedly hamstrung by Covid), we’ve seen a significant increase in simulations, projects, work placements, and workplace assessment – all of which bears witness to its growing importance in assessment design.

Of course, it’s probably true to say that VET has always had one eye on authentic assessment through providing real life tasks for students in many occupational areas.

Reliability and validity

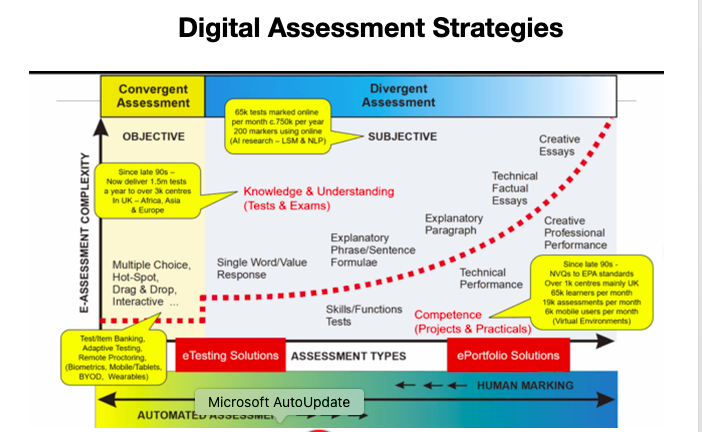

A Jisc and City and Guilds webinar held in early 2022 focused on assessment for learning and the potential to augment or improve reliability, validity, and efficiency[8]. The webinar was one of a series intended to drive forward the sector conversation around the future of assessment, challenging existing practice and highlighting the opportunities and affordances of technology-enhanced assessment and learning. Patrick Craven from City and Guilds pointed out that different approaches to eAssessment could support more reliable and/or more valid assessments. There remain constraints in terms of efficiency: very reliable and valid systems were not always efficient. Conversely, reliable and efficient systems like multiple-choice questions often delivered lower validity. The use of technologies for assessment is largely dependent on the purpose of the assessment and the nature of what is being assessed (see Diagram 1: Digital Assessment Strategies).

Diagram 1: Digital Assessment Strategies (Patrick Craven)

Good Learning, Teaching, and Assessment practice

In a recent publication[9], UK Jisc put forward seven principles of good learning, teaching, and assessment, why they are important and how to apply them. The principles are:

- Help learners understand what good looks like by engaging learners with the requirements and performance criteria for each task

- Support the personalised needs of learners by being accessible, inclusive, and compassionate

- Foster active learning by recognising that engagement with learning resources, peers and tutors can all offer opportunities for formative development

- Develop autonomous learners by encouraging self-generated feedback, self-regulation, reflection, dialogue, and peer review

- Manage staff and learner workload effectively by having the right assessment, at the right time, supported by efficient business processes

- Foster a motivated learning community by involving students in decision-making and supporting staff to critique and develop their own practice

- Promote learner employability by assessing authentic tasks and promoting ethical conduct.

In an earlier report from 2020, “The future of assessment: five principles, five targets for 2025”[10], Jisc set five targets for the next five years to progress assessment towards being more authentic, accessible, appropriately automated, continuous, and secure.

- Authentic: Assessments designed to prepare students for what they do next, using technology they will use in their careers

- Accessible: Assessments designed with an accessibility-first principle

- Appropriately automated: A balance found of automated and human marking to deliver maximum benefit to students

- Continuous: Assessment data used to explore opportunities for continuous assessment to improve the learning experience

- Secure: Authoring detection and biometric authentication adopted for identification and remote proctoring

The Australian National Quality Council[11] has outlined the added value it sees in e-Assessment from the perspective of learners:

- Improved explanation of competency requirements – examples include the use of forums, blogs, virtual classrooms, video streaming and voice over internet protocols (VoIP).

- Gaining immediate feedback – examples include the use of virtual classrooms, online quizzes and LMS.

- Improved opportunities for online peer assessment – examples include the use of email, wikis, blogs, voice boards, virtual classrooms, and VoIP.

- Increased opportunities for self-assessment – examples include use of digital stories, wikis, blogs and online quizzes.

- Improved feedback by including links to online support materials – examples include the use of LMS and virtual classrooms.

Design for eAssessment

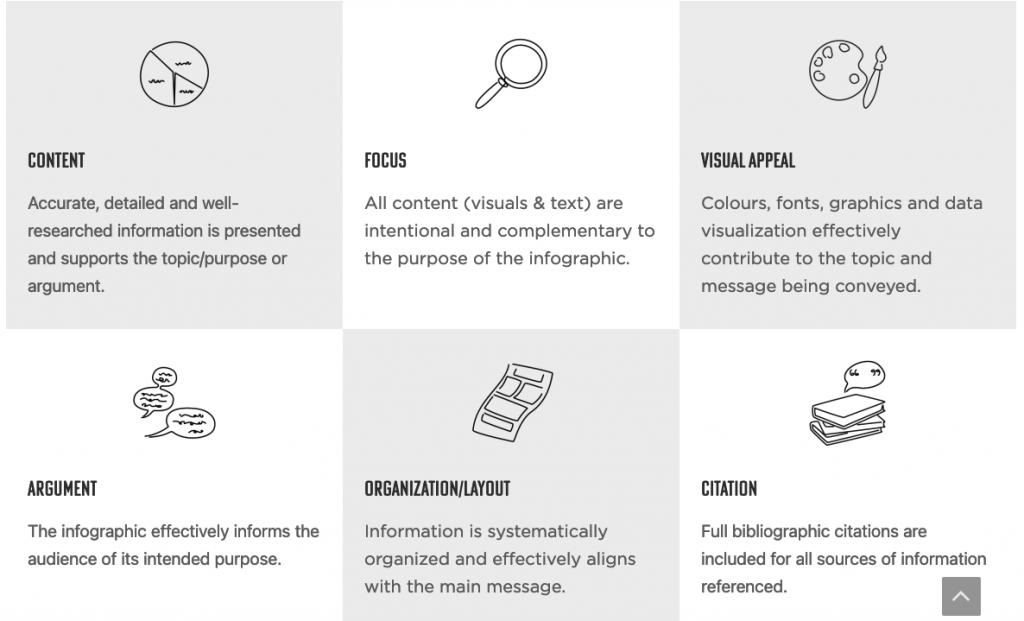

Rubrics are commonly used in designing assessment. Rubrics can be holistic or analytical. Ruth Martin provides examples to explain the difference.

For example, imagine your students have to create a timeline. In the rubric you create to evaluate it, you’ll need to define which aspects will be assessed (e.g., presentation, spelling, resources used, etc.) and the different levels of achievement. These levels of achievement can also be called assessment indicators. They can take the form of traditional school grades (A, B, C, etc.), or you can use terms such as excellent, good work, could be better, etc. This is an analytical rubric.
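For readers who want to see how an analytical rubric like the timeline example might be represented inside an e-assessment tool, here is a minimal sketch in Python. The criteria and level labels come from the example above; the point values, the weights, and the names `Criterion` and `score_analytical` are my own assumptions for illustration, not part of any particular tool or of Ruth Martin's example.

```python
from dataclasses import dataclass

# Level labels are taken from the example above; the point values attached
# to them are an assumption made purely for illustration.
LEVELS = {"could be better": 1, "good work": 2, "excellent": 3}


@dataclass
class Criterion:
    """One aspect of the work that the rubric assesses."""
    name: str
    weight: float = 1.0  # hypothetical weighting, not from the source


def score_analytical(criteria, judgements):
    """Score one piece of work against every criterion separately.

    Returns the points awarded per criterion and an overall weighted
    percentage, so the learner sees where marks were gained or lost.
    """
    missing = [c.name for c in criteria if c.name not in judgements]
    if missing:
        raise ValueError(f"No judgement recorded for: {', '.join(missing)}")

    points = {c.name: LEVELS[judgements[c.name]] for c in criteria}
    max_points = max(LEVELS.values())
    total_weight = sum(c.weight for c in criteria)
    weighted = sum(points[c.name] * c.weight for c in criteria)
    percentage = 100 * weighted / (max_points * total_weight)
    return points, round(percentage, 1)


# The timeline task, with "resources used" counted double (an assumption).
timeline_rubric = [
    Criterion("presentation"),
    Criterion("spelling"),
    Criterion("resources used", weight=2.0),
]
judgements = {
    "presentation": "excellent",
    "spelling": "good work",
    "resources used": "good work",
}
points, percentage = score_analytical(timeline_rubric, judgements)
print(points, percentage)
```

The point of keeping a separate judgement per criterion is that the feedback stays visible to the learner, which is what distinguishes this from a single overall grade.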

A lot of the interest in rubrics is because they are seen as making assessment (and particularly grading) more objective and transparent. But rubrics can also help to select and plan approaches to using technology for assessment. Jaclyn Doherty[12] explains that Universal Design for Learning principles suggest providing learners with multiple ways to interact with content and show what they’ve learned.

For example, you could provide learners with the choice to create an infographic, a video or a written essay to explain a concept. As long as the learning outcome still aligns with the type of assessment, the approach learners take to get there shouldn’t matter.

This particular web page is all about using infographics for assessment and provides a walk-through of how to do it. But her assessment rubric (Figure 3, above) shows that this design process can be simple and straightforward. In fact, it may be that the skills and competences to design such simple rubrics are key to using technology for assessment.

Issues and barriers to the use of e-Assessment

In this section, we will look at some of the issues and barriers to using e-Assessment in vocational education and training.

The role of teachers and trainers

In research undertaken in VET into the European DigCompEdu Framework[13] for educators' competence in using technology for learning, it was found that the area in which teachers and trainers felt least confident and competent was assessment. Further research undertaken through the Erasmus+ e-Assessment programme suggests those feeling most confident are teachers and trainers who have both completed initial training and participated in professional development in the use of technology for assessment. This is especially important because, if formative assessment is to be extended as a pedagogical approach, it will require teachers and trainers to be actively involved in designing assessment activities.

But there is another side to this issue, that of control. Assessment regulations vary greatly between European countries. The issue here is the opportunities that teachers and trainers have to design their own assessment rubrics. Competence statements and assessment requirements may be determined at many different levels, from government down. Examination and qualification organisations may determine assessment requirements, as may industry and training bodies or even institutions at a management level. Put simply, even if they wish to develop innovative approaches using e-Assessment, teachers and trainers may not be allowed to do so.

On the other hand, most teachers and trainers can develop formative assessment approaches as part of their teaching and learning. Using technology for formative assessment offers not just an opportunity for innovation, but at the same time an opportunity to improve teaching and learning in vocational education and training.

Infrastructure

Infrastructure remains a barrier to the use of technology for assessment. It is not so much an issue of access to computers (nearly all trainees carry a modern and powerful mobile phone these days), or even of access to e-Assessment applications: after all, it is possible to use common productivity applications or to develop assessment exercises using the excellent H5P[14] open-source software, which is now integrated in the Moodle VLE as well as common content management systems. Rather, it is a question of interoperability between systems, and especially of data entry, storage and sharing.

Things may be improving. In a recent report, the UK Jisc say that although "Previous research found good practice was often difficult to scale up because it required manual intervention or tools that were not interoperable", examples provided in their latest report "show innovative practice delivered at scale and using open standards to facilitate seamless integration with existing tools and administrative systems"[15].

Proctoring

Virtual proctoring is proving very controversial. Proctoring is linked to the widespread concern that students are cheating in online exams, whether through the use of essay mills or because it is difficult to prove the identity of the person undertaking an online assessment.

The EU-funded TeSLA project (Adaptive Trust-based e-Assessment System for Learning)[16] has developed a proctoring system which combines the instruments developed by several institutions and companies that are part of the project consortium. Biometric instruments are used to authenticate students, while the instruments that analyse text are employed in verifying authorship. Some textual analysis instruments, like writing-style (forensic) analysis, can also be used for authentication purposes. There is a major concern that such approaches intrude on users’ privacy. There are also questions about the accuracy and efficacy of systems which use AI as the basis for decision making. And clearly such systems present a major barrier to many students with disabilities.

Ethics and Security

The question of proctoring raised above is just one of the many ethical issues posed by the increasing use of technologies, including artificial intelligence, in education. These will be discussed at more length in a following section of this toolkit. And of course, in addition to issues of ethics, the increasing use of data, including data for assessment, raises questions of both data ownership and data security.

References

[2] Patrick Craven, History and Challenges of e-assessment. The 'Cambridge Approach' perspective - e-assessment research and development 1989 to 2009, https://www.cambridgeassessment.org.uk/Images/138440-history-and-challenges-of-e-assessment-the-cambridge-approach-perspective-e-assessment-research-and-development-1989-to-2009-by-patrick-craven.pdf

[3] National Quality Council (2011) E assessment guidelines for the VET sector, Australian Government Department of Education, Employment and Workplace Relations, https://www.voced.edu.au/content/ngv%3A46939

[4] Mike Mimirinis (2018) Qualitative differences in academics’ conceptions of e-assessment. https://repository.uwl.ac.uk/id/eprint/7087/1/Mimirinis_2018_AEHE_Qualitative_differences_in_academics%E2%80%99_conceptions_of_e-assessment.pdf

[5] Pachler, N., Daly, C., Mor, Y. & Mellar, H. (2010). Formative e-assessment: Practitioner cases. Computers & Education, 54(3), 715–721

[6] Jisc (2022) Principles of good assessment and feedback, https://www.jisc.ac.uk/guides/principles-of-good-assessment-and-feedback

[7] Shane Sutherland (2022) The road to authentic assessment – how universities can harness the practice in the year ahead

[8] Assessment for Learning: Digital Innovation, https://beta.jisc.ac.uk/events/assessment-for-learning-digital-innovation#event-resources

[9] Jisc (2022) Principles of good assessment and feedback, https://www.jisc.ac.uk/guides/principles-of-good-assessment-and-feedback

[10] Jisc (2020) The future of assessment: five principles, five targets for 2025, https://www.jisc.ac.uk/reports/the-future-of-assessment

[11] National Quality Council (2011) E assessment guidelines for the VET sector, Australian Government Department of Education, Employment and Workplace Relations, https://www.voced.edu.au/content/ngv%3A46939

[12] Jaclyn Doherty (2020) Infographics for Assessment, https://learninginnovation.ca/infographics-for-assessment/

[13] Digital Competence Framework for Educators (DigCompEdu) https://joint-research-centre.ec.europa.eu/digcompedu_en

[14] https://h5p.org/

[15] Jisc (2022) Principles of good assessment and feedback, https://www.jisc.ac.uk/guides/principles-of-good-assessment-and-feedback

[16] http://tesla-project.eu