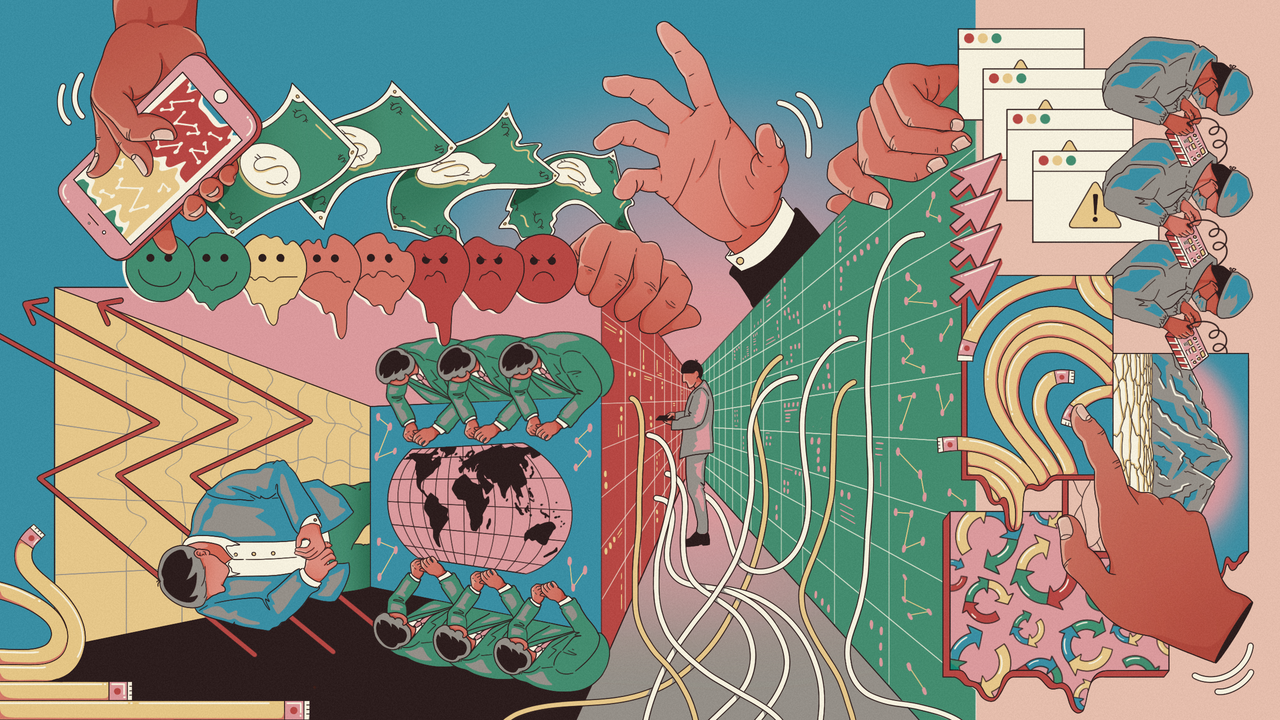

Interesting article in the UK Guardian newspaper today. It illustrates how people have failed to cope with digital technologies and computers in the past, how they may be failing now, and how Generative AI is affecting, and often challenging, wider areas of society.

The background is a long-running scandal following the convictions of more than 700 sub-postmasters prosecuted on the basis of 'evidence' of fraud from the Horizon software system, developed for the UK Post Office by Fujitsu. The Post Office and the software company had falsely denied that the system could be accessed remotely. Around 700 of the more than 900 prosecutions resulting from the scandal were brought by the Post Office, with the rest carried out by other bodies, including the Crown Prosecution Service.

Some sub-postmasters caught up in the scandal have died or taken their own lives in the intervening years, and many were forced to repay the supposed financial shortfalls that the system reported.

The issue gained far wider public attention after the ITV television drama Mr Bates vs the Post Office.

Now, hundreds of people wrongly convicted in the Post Office scandal could have their names cleared this year, after emergency laws were announced to "swiftly exonerate and compensate victims".

The Guardian article points to the legal background that allowed such a scandal to happen.

In English and Welsh law, computers are assumed to be “reliable” unless proven otherwise. The Guardian explains:

> The legal presumption that computers are reliable stems from an older common law principle that “mechanical instruments” should be presumed to be in working order unless proven otherwise. That assumption means that if, for instance, a police officer quotes the time on their watch, a defendant cannot force the prosecution to call a horologist to explain from first principles how watches work.

They quote Stephen Mason, a barrister and expert on electronic evidence, who says this approach reverses the burden of proof normally applied in criminal cases:

> It says, for the person who’s saying ‘there’s something wrong with this computer’, that they have to prove it. Even if it’s the person accusing them who has the information.

The article goes on to quote Noah Waisberg, the co-founder and CEO of the legal AI platform Zuva, who thinks the rise of AI systems makes it even more pressing to reassess the law:

> With a traditional rules-based system, it’s generally fair to assume that a computer will do as instructed. Of course, bugs happen, meaning it would be risky to assume any computer program is error-free.
>
> Machine-learning-based systems don’t work that way. They are probabilistic … you shouldn’t count on them to behave consistently – only to work in line with their projected accuracy.